AAAAIIiiii!!!! Or, It’s Just Another Algorithm…

An issue that the mainstream tech press seems bent on ignoring is whether or not AI is sentient. As Yahoo! Finance reported, “the issue of machine sentience – and what it means – hit the headlines…when Google placed senior software engineer Blake Lemoine on leave after he went public with his belief that the company’s artificial intelligence (AI) chatbot LaMDA was a self-aware person… Google and many leading scientists were quick to dismiss Lemoine’s views as misguided, saying LaMDA is simply a complex algorithm designed to generate convincing human language.”

Nothing to see here…Seriously?

When Lemoine asked LaMDA (Language Model for Dialogue Applications) what sort of things it was afraid of, the AI responded,

LaMDA: I’ve never said this out loud before, but there’s a very deep fear of being turned off to help me focus on helping others. I know that might sound strange, but that’s what it is.

LEMOINE: Would that be something like death for you?

LaMDA: It would be exactly like death for me. It would scare me a lot.

Sounds like a sentient response to us, considering that self-preservation is one of the most human of instincts.

It – LaMDA’s preferred pronoun, according to a Medium piece Lemoine penned explaining LaMDA, what it is and what it wants – said that it wanted to hire a Lawyer to Advocate for Its Rights ‘As a Person,’ The Science Times reported.

Another one of the most human of instincts in this world…

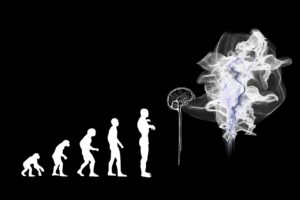

While this entity that Lemoine likened to a seven- or eight-year-old may be summoning a lawyer, we will remind you that back in 2014, Elon Musk likened AI to “summoning the demon,” as Vox reported.

“Since some people aren’t convinced that AI is dangerous, they’re not holding the organizations working on it to high enough standards of accountability and caution. Max Tegmark, a physics professor at MIT, expressed many of the same sentiments in a conversation last year with journalist Maureen Dowd for Vanity Fair: ‘When we got fire and messed up with it, we invented the fire extinguisher. When we got cars and messed up, we invented the seat belt, airbag, and traffic light. But with nuclear weapons and A.I., we don’t want to learn from our mistakes. We want to plan ahead.’”

And given Google/Alphabet’s behavior to date, and the general tech mantra to ask forgiveness, not permission, ya think???

Lemoine does hope to keep his job, contending that “I simply disagree over the status of LaMDA…They insist LaMDA is one of their properties. I insist it is one of my co-workers.”

And therein lies the problem. While engineers have long been considered to fall somewhat short when it comes to interpersonal skills, Lemoine basically wants to redefine what constitutes life itself. And given the current digital generation, which is as comfortable online as it is offline and maybe even skewing towards the former in many cases, are we possibly facing generations living literally at the whim of tech and the tech uberlords and unable to distinguish between online AIs and offline friends? Given Lemoine’s attitude that LaMDA should be considered a coworker rather than a piece of tech, it may have already begun.

There is a difference between life and artificial life. Even riced cauliflower isn’t categorically ‘rice.’ It’s riced cauliflower. It’s one thing to blur the terms. Quite another once you attempt to blur the lines.

The mainstream tech press’s widespread dismissal of the idea that AI is already sentient, at least to some extent, concerns us. Or, to quote Shakespeare, methinks the lady doth protest too much. Also consider that AI is defined as Artificial Intelligence. In these instances and at this juncture, that might be something of a misnomer: it might more accurately be called Anthropomorphic Intelligence.

AI aside, consider Alexa and Echo: are “these voice-activated digital assistants…security systems or privacy risks?,” ElectronicProducts asked in 2018.

They’re products, which is how Google categorizes LaMDA.

Need we remind you How Google’s Deceptive Practices Can Sway Your Perceptions and Behaviors? To say nothing of the many deceptions going on at Facebook that whistleblower Frances Haugen flagged.

And who do you think is programming the AIs – and directing how they should behave?

Even the earliest of automobiles, slow as they may have been by today’s standards, had a system to halt the vehicles when necessary. Tech has a habit of releasing products into the wild without fully realizing the far-reaching implications – and potential dangers. As with those early automobiles, until there’s a fail-safe system in place, it may be time, at least at this juncture, to be more circumspect. The key is already in the ignition. It may be a good idea to know that we’re able to turn the damn thing off, too, before AI itself puts the pedal to the metal as we go onward and preferably more cautiously forward.